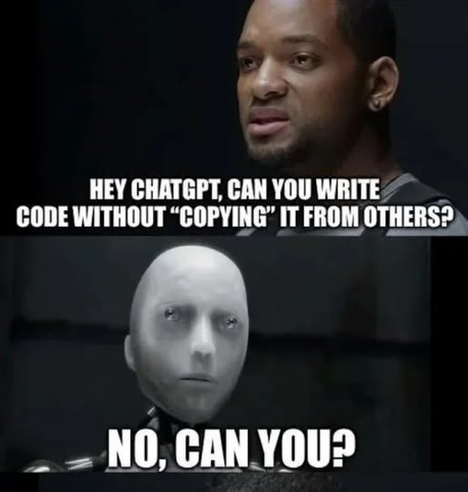

I distrust claims about LLMs' reasoning and math ability.

I'm not just skeptical; the way we measure and report these things is majorly broken.

I just read a paper (http://arxiv.org/abs/2410.05229) that discusses a popular math skills dataset (GSM8K), why it's inadequate, and how LLM performance tanks on a more robust test.

Two big problems here:

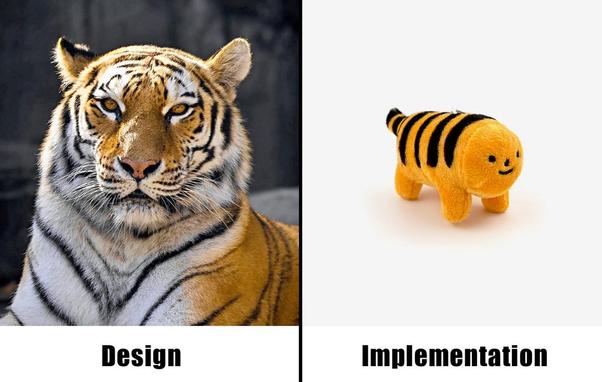

Evaluating "mathematical reasoning" should include checks like this: an equation works the same way regardless of what numbers you plug in. These models tend to memorize patterns of number tokens without generalizing, but GSM8K can't detect that. It's embarrassing that we proudly report success without considering whether the benchmark actually tests the thing we care about.
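To make the "plug in different numbers" idea concrete, here's a rough sketch of that kind of check. It is not the paper's code: the template, the function names, and the two toy "solvers" are all made up for illustration. The idea is to turn one word problem into a template, resample the names and numbers, and see whether accuracy holds up.

```python
import random
import re
from typing import Callable, Tuple

# Hypothetical problem template; the placeholders get resampled per variant.
TEMPLATE = (
    "{name} has {a} apples and buys {b} bags with {c} apples each. "
    "How many apples does {name} have now?"
)

# The single fixed instance a static benchmark would contain.
CANONICAL_QUESTION = TEMPLATE.format(name="Sophie", a=5, b=3, c=4)
CANONICAL_ANSWER = 5 + 3 * 4


def make_variant(rng: random.Random) -> Tuple[str, int]:
    """Sample new surface details and compute the matching ground truth."""
    name = rng.choice(["Sophie", "Omar", "Mei", "Lucas"])
    a, b, c = rng.randint(2, 50), rng.randint(2, 9), rng.randint(2, 12)
    return TEMPLATE.format(name=name, a=a, b=b, c=c), a + b * c


def accuracy_on_variants(solver: Callable[[str], int], n: int = 100, seed: int = 0) -> float:
    """Fraction of resampled variants the solver answers correctly."""
    rng = random.Random(seed)
    variants = [make_variant(rng) for _ in range(n)]
    return sum(solver(q) == answer for q, answer in variants) / n


def memorizer(question: str) -> int:
    """Toy stand-in for a model that memorized the benchmark answer."""
    return CANONICAL_ANSWER


def reasoner(question: str) -> int:
    """Toy stand-in for a model that applies the underlying equation."""
    a, b, c = (int(tok) for tok in re.findall(r"\d+", question))
    return a + b * c


if __name__ == "__main__":
    for solver in (memorizer, reasoner):
        fixed = solver(CANONICAL_QUESTION) == CANONICAL_ANSWER
        print(solver.__name__, "fixed item correct:", fixed,
              "| variant accuracy:", accuracy_on_variants(solver))
```

Both toy solvers get the fixed item right, so a static test can't tell them apart. Only the resampled variants separate them. With a real model you'd swap in a function that calls it and parses its final answer.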

Worse, this whole math test has leaked into the models' training data. We know this, and we can demonstrate that the models are memorizing the answers. Yet folks still report steady gains as if that means something. That's either willfully ignorant or deceitful.